Last autumn, Jered “Threatin” Eames staged the most alienating, least explicable rock tour stunt since the Sex Pistols hit the Deep South. He recently broke his silence.

“The Great Heavy Metal Hoax”

by David Kushner

Rolling Stone

December 14, 2018

In November, managers of rock clubs across the United Kingdom began sharing the same weird tale. A pop-metal performer, Threatin, had rented their clubs for his 10-city European tour. Club owners had never heard of the act when a booking agent approached them promising packed houses. Threatin had fervent followers, effusive likes, rows of adoring comments under his YouTube concert videos, which showed him windmilling before a sea of fans. Websites for the record label, managers and a public-relations company who represented Threatin added to his legitimacy. Threatin’s Facebook page teemed with hundreds of fans who had RSVP’d for his European jaunt, which was supporting his album, Breaking the World.

But despite all the hype, almost no one came to the shows. It was just Threatin and his three-piece band onstage, and his wife, Kelsey, filming him from the empty floor. And yet Threatin didn’t seem to care; he just ripped through a set as if there were a full house. When confronted by confused club owners, Threatin just shrugged, blaming the lack of audience on bad promotion. “It was clear that something weird was happening,” says Jonathan “Minty” Minto, who was bartending the night Threatin played at the Exchange, a Bristol club, “but we didn’t realize how weird.” Intrigued, Minto and his friends started poking around Threatin’s Facebook page, only to find that most of the fans lived in Brazil. “The more we clicked,” says Minto, “the more apparent it became that every single attendee was bogus.”

It all turned out to be fake: The websites, the record label, the PR company, the management company, all traced back to the same GoDaddy account. The throngs of fans in Threatin’s concert videos were stock footage. The promised RSVPs never appeared. When word spread of Threatin’s apparent deception, club owners were perplexed: Why would someone go to such lengths just to play to empty rooms?

How much of the internet is fake? Studies generally suggest that, year after year, less than 60 percent of web traffic is human; some years, according to some researchers, a healthy majority of it is bot. For a period of time in 2013, the New York Times reported in 2018, a full half of YouTube traffic was “bots masquerading as people,” a portion so high that employees feared an inflection point after which YouTube’s systems for detecting fraudulent traffic would begin to regard bot traffic as real and human traffic as fake. They called this hypothetical event “the Inversion.”

Fake videos can now be created using a machine-learning technique called a “generative adversarial network,” or GAN. A graduate student, Ian Goodfellow, invented GANs in 2014 as a way to algorithmically generate new data out of existing data sets. For instance, a GAN can look at thousands of photos of Barack Obama and then produce a new photo that approximates them without being an exact copy of any one, as if it had come up with a portrait of the former president that was never actually taken. GANs might also be used to generate new audio from existing audio, or new text from existing text; it is a multi-use technology.

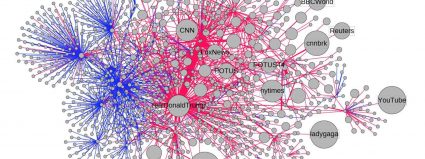

Aside from their role in amplifying the reach of misinformation, bots also play a critical role in getting it off the ground in the first place. According to the study, bots were likely to amplify false tweets right after they were posted, before they went viral. Then users shared them because it looked like a lot of people already had.

In mid-2016,