In a brilliant and dizzying end-of-year rant, Max Read takes stock of how much of our digital world is constructed from weapons-grade fraud, deception, nonsense, hokum, and miscellaneous bullshit.

“How Much of the Internet is Fake? Turns Out, a Lot of It, Actually”

by Max Read

New York Intelligencer

December 26, 2018

How much of the internet is fake? Studies generally suggest that, year after year, less than 60 percent of web traffic is human; some years, according to some researchers, a healthy majority of it is bot. For a period of time in 2013, the Times reported this year, a full half of YouTube traffic was “bots masquerading as people,” a portion so high that employees feared an inflection point after which YouTube’s systems for detecting fraudulent traffic would begin to regard bot traffic as real and human traffic as fake. They called this hypothetical event “the Inversion.”
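The "Inversion" worry makes sense if a detector learns what normal traffic looks like from the traffic itself. Once bots are the majority, bot behavior becomes the baseline and humans start to look anomalous. The sketch below is a toy built on that assumption. The source does not describe how YouTube's detection actually works, and `flag_suspicious`, the request rates, and the tolerance are all hypothetical.

```python
import statistics

# Hypothetical request rates (requests per minute): people browse slowly,
# bots hammer the site.
HUMAN_RATE, BOT_RATE = 2.0, 30.0

def flag_suspicious(rates, tolerance=0.5):
    """Toy majority-calibrated detector: the median rate defines 'normal',
    and anything far from it is flagged as suspicious."""
    baseline = statistics.median(rates)
    return [abs(r - baseline) > tolerance * baseline for r in rates]

def flagged_shares(n_humans, n_bots):
    """Return (fraction of humans flagged, fraction of bots flagged)."""
    rates = [HUMAN_RATE] * n_humans + [BOT_RATE] * n_bots
    flags = flag_suspicious(rates)
    humans_flagged = sum(flags[:n_humans]) / n_humans
    bots_flagged = sum(flags[n_humans:]) / n_bots
    return humans_flagged, bots_flagged

print(flagged_shares(60, 40))  # humans in the majority -> (0.0, 1.0)
print(flagged_shares(40, 60))  # bots in the majority   -> (1.0, 0.0)
```

The detector itself never changes between the two runs. Only the mix of traffic does, and that alone is enough to flip which group gets labeled fake.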

In the future, when I look back from the high-tech gamer jail in which President PewDiePie will have imprisoned me, I will remember 2018 as the year the internet passed the Inversion, not in some strict numerical sense, since bots already outnumber humans online more years than not, but in the perceptual sense. The internet has always played host in its dark corners to schools of catfish and embassies of Nigerian princes, but that darkness now pervades its every aspect: Everything that once seemed definitively and unquestionably real now seems slightly fake; everything that once seemed slightly fake now has the power and presence of the real. The “fakeness” of the post-Inversion internet is less a calculable falsehood and more a particular quality of experience: the uncanny sense that what you encounter online is not “real” but is also undeniably not “fake,” and indeed may be both at once, or in succession, as you turn it over in your head.

Fake videos can now be created using a machine learning technique called a “generative adversarial network” (GAN). A graduate student, Ian Goodfellow, invented GANs in 2014 as a way to algorithmically generate new data that resembles an existing data set. For instance, a GAN can look at thousands of photos of Barack Obama and then produce a new photo that approximates those photos without being an exact copy of any one of them, as if it had come up with an entirely new portrait of the former president, one never actually taken. GANs might also be used to generate new audio from existing audio, or new text from existing text; it is a multi-use technology.
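To make the adversarial idea concrete, here is a deliberately tiny sketch in plain Python. It is a hypothetical toy, not Goodfellow's original setup. The "existing data set" is a stream of numbers drawn from a normal distribution with mean 2 (`REAL_MEAN`). The generator `G(z) = mu + z` turns random noise into candidate samples. The discriminator is a single logistic unit that scores how "real" a number looks. The two take turns: the discriminator adjusts to tell real samples from generated ones, then the generator adjusts `mu` to fool it. All names and settings (`train_gan`, `generate`, learning rate, batch size, step count) are illustrative assumptions.

```python
import math
import random

# Hypothetical "real" data set: samples from a normal distribution N(2, 1).
REAL_MEAN, REAL_STD = 2.0, 1.0

def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def train_gan(steps=3000, batch=64, lr=0.05, seed=0):
    rng = random.Random(seed)
    mu = 0.0          # generator G(z) = mu + z, z ~ N(0, 1); starts far from the data
    w, c = 0.0, 0.0   # discriminator D(x) = sigmoid(w * x + c)
    for _ in range(steps):
        real = [rng.gauss(REAL_MEAN, REAL_STD) for _ in range(batch)]
        fake = [mu + rng.gauss(0.0, 1.0) for _ in range(batch)]

        # Discriminator step: gradient ascent on
        # mean(log D(real)) + mean(log(1 - D(fake))).
        gw = gc = 0.0
        for x in real:
            g = 1.0 - sigmoid(w * x + c)
            gw += g * x
            gc += g
        for x in fake:
            g = sigmoid(w * x + c)
            gw -= g * x
            gc -= g
        w += lr * gw / batch
        c += lr * gc / batch

        # Generator step: gradient descent on mean(-log D(G(z))), the
        # "non-saturating" generator loss; its derivative w.r.t. mu is
        # -(1 - D(x)) * w, so we move mu by +lr * mean((1 - D(x)) * w).
        gmu = sum((1.0 - sigmoid(w * x + c)) * w for x in fake) / batch
        mu += lr * gmu
    return mu, w, c

def generate(mu, n, seed=1):
    """Draw n new samples from the trained generator."""
    rng = random.Random(seed)
    return [mu + rng.gauss(0.0, 1.0) for _ in range(n)]

mu, w, c = train_gan()
print(f"learned generator mean: {mu:.2f} (real data mean: {REAL_MEAN})")
print(generate(mu, 3))
```

Because this discriminator is linear, it can only tell the two distributions apart by their averages, so a single shift parameter is all the generator needs here. Real GANs use deep networks on both sides and high-dimensional data such as images, and their training can oscillate or fail to converge. The toy only shows the two-player structure.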

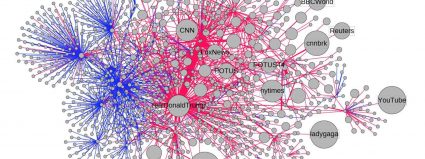

Aside from their role in amplifying the reach of misinformation, bots also play a critical role in getting it off the ground in the first place. According to the study, bots were likely to amplify false tweets right after they were posted, before they went viral. Then users shared them because it looked like a lot of people already had.
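The feedback loop described here, where early bot shares make a post look popular so that people share it too, can be illustrated with a toy model. This is not the study's method. The model and every parameter in it (`simulate_shares`, `viewers`, `base_rate`, `social_proof`) are hypothetical. Each round a fixed number of people see the post, and the chance that each one shares it rises with the number of shares it already has. Bots act only once, at the start.

```python
def simulate_shares(bot_shares, rounds=10, viewers=100,
                    base_rate=0.01, social_proof=0.002):
    """Expected share count after each round (hypothetical toy model).

    Each round, `viewers` people see the post and each shares it with
    probability base_rate + social_proof * (shares so far), capped at 1.
    Bots contribute `bot_shares` once, before any person sees the post.
    """
    shares = float(bot_shares)
    history = [shares]
    for _ in range(rounds):
        p = min(1.0, base_rate + social_proof * shares)
        shares += viewers * p
        history.append(shares)
    return history

def human_shares(bot_shares, **kwargs):
    """Shares contributed by people, excluding the bots' initial burst."""
    return simulate_shares(bot_shares, **kwargs)[-1] - bot_shares

organic = human_shares(0)
boosted = human_shares(50)
print(f"human shares with no bot boost:   {organic:.1f}")
print(f"human shares after 50 bot shares: {boosted:.1f}")
```

In this model the bots never touch the post after the first moment. The much larger human share count comes entirely from people reacting to a number that looked like organic popularity.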