On the topic of our tenuous collective relationship with the concept formerly known as “truth,” this examination of “deep fakes,” high-tech simulated video recordings of people you recognize doing things they’ve never actually done, may be the most frightening and portentous emerging story of 2018. And that’s saying a mouthful.

“You thought fake news was bad? Deep fakes are where truth goes to die”

by Oscar Schwartz

November 12, 2018

The Guardian

Fake videos can now be created using a machine learning technique called a “generative adversarial network”, or a GAN. A graduate student, Ian Goodfellow, invented GANs in 2014 as a way to algorithmically generate new types of data out of existing data sets. For instance, a GAN can look at thousands of photos of Barack Obama, and then produce a new photo that approximates those photos without being an exact copy of any one of them, as if it has come up with an entirely new portrait of the former president not yet taken. GANs might also be used to generate new audio from existing audio, or new text from existing text – it is a multi-use technology.

The use of this machine learning technique was mostly limited to the AI research community until late 2017, when a Reddit user who went by the moniker “Deepfakes” – a portmanteau of “deep learning” and “fake” – started posting digitally altered pornographic videos. He was building GANs using TensorFlow, Google’s free open source machine learning software, to superimpose celebrities’ faces on the bodies of women in pornographic movies.

A number of media outlets reported on the porn videos, which became known as “deep fakes”. In response, Reddit banned them for violating the site’s content policy against involuntary pornography. By this stage, however, the creator of the videos had released FakeApp, an easy-to-use platform for making forged media. The free software effectively democratized the power of GANs. Suddenly, anyone with access to the internet and pictures of a person’s face could generate their own deep fake. Read more.

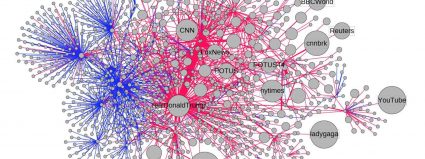

Aside from their role in amplifying the reach of misinformation, bots also play a critical role in getting it off the ground in the first place. According to the study, bots were likely to amplify false tweets right after they were posted, before they went viral. Then users shared them because it looked like a lot of people already had.
